📘 Why Is Log Parsing Important in Datadog?

When your apps or microservices send logs to Datadog, a log is sometimes just plain text or raw JSON embedded in a message. To search, filter, and monitor specific values such as objectId, eventId, or sourceId, you first need to extract those values. This is called log parsing.

Parsing helps you:

- Easily filter logs by key values (e.g., source ID or change flag)

- Build better dashboards and monitors

- Save time debugging by reading structured logs

- Avoid confusion when logs are mixed or nested

In this post, I will show how I parsed a custom log with Grok rules in Datadog, using a real example.

🧪 Sample Logs I Want to Parse

Log 1 – JSON inside a list:

Received deleted object: [{"eventId": 1234567890, "subscriptionId": 11223344, "portalId": 55667788, "appId": 990011, "occurredAt": 1717171717171, "subscriptionType": "user.deletion", "attemptNumber": 1, "objectId": 9876543210, "changeFlag": "REMOVED", "changeSource": "MANUAL_DELETE", "sourceId": "userRef:111222"}]

Log 2 – Plain JSON object:

Received deleted object: {"eventId": 1234567890, "subscriptionId": 11223344, "portalId": 55667788, "appId": 990011, "occurredAt": 1717171717171, "subscriptionType": "user.deletion", "attemptNumber": 1, "objectId": 9876543210, "changeFlag": "REMOVED", "changeSource": "MANUAL_DELETE", "sourceId": "userRef:111222"}

⚙️ Step-by-Step: Creating Parsing Rules in Datadog

1. Go to Log Pipelines

- In the Datadog UI, go to Logs > Configuration > Pipelines

- Create a new pipeline or open an existing one

2. Add a Grok Parser

- Add a new Grok Parser

- Then insert these parsing rules:

Rule1 Received deleted object: %{data:DeletedObject:json}

Rule2 Received deleted object: \[%{data:DeletedObject:json}\]

💡 Explanation:

- Rule1 matches Log 2 (plain JSON)

- Rule2 matches Log 1 (JSON in list format)

- Both extract the full JSON object into the field called DeletedObject

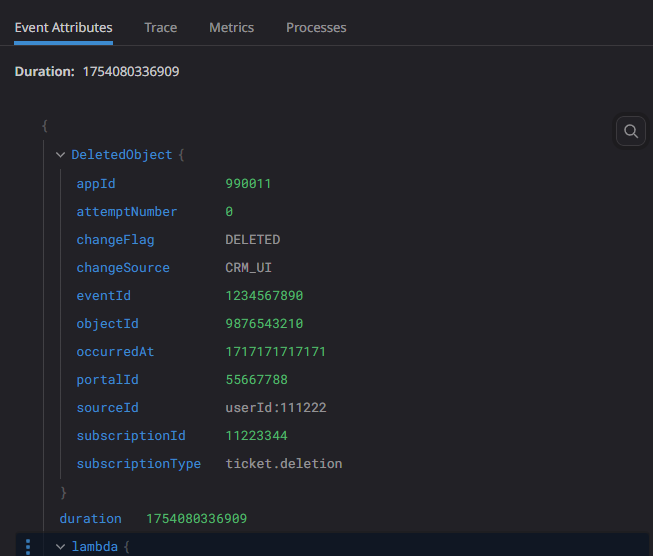
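To check the intended result outside Datadog, the effect of the two rules can be approximated locally. The sketch below is not how Datadog implements Grok; it is a plain Python emulation, and the function name `parse_deleted_object` is a hypothetical helper. It strips the fixed prefix, decodes the JSON, and unwraps the one-element list if present, so both log formats end up under the same `DeletedObject` key.

```python
import json

# Fixed text that both Grok rules expect before the JSON payload.
PREFIX = "Received deleted object: "


def parse_deleted_object(message):
    """Return {"DeletedObject": {...}}, or None if the message does not match."""
    if not message.startswith(PREFIX):
        return None
    try:
        payload = json.loads(message[len(PREFIX):])
    except json.JSONDecodeError:
        return None
    # Rule2 case: the object arrives wrapped in a one-element list.
    if isinstance(payload, list):
        if len(payload) != 1:
            return None
        payload = payload[0]
    # Rule1 case (or unwrapped Rule2): the payload must be a JSON object.
    if not isinstance(payload, dict):
        return None
    return {"DeletedObject": payload}


# Shortened versions of the two sample logs.
log_list = 'Received deleted object: [{"eventId": 1234567890, "changeFlag": "REMOVED"}]'
log_plain = 'Received deleted object: {"eventId": 1234567890, "changeFlag": "REMOVED"}'

print(parse_deleted_object(log_list))
print(parse_deleted_object(log_plain))
```

Both calls should produce the same structure, which is the point of having the two rules: whichever format the service emits, the fields land in one place.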

🧾 Parsed Output in Datadog

After parsing, this is what Datadog shows:

    {
      "DeletedObject": {
        "eventId": 1234567890,
        "subscriptionId": 11223344,
        "portalId": 55667788,
        "appId": 990011,
        "occurredAt": 1717171717171,
        "subscriptionType": "user.deletion",
        "attemptNumber": 1,
        "objectId": 9876543210,
        "changeFlag": "REMOVED",
        "changeSource": "MANUAL_DELETE",
        "sourceId": "userRef:111222"
      }
    }

This output is now easy to work with in:

- Monitors (e.g. alert when changeFlag = REMOVED)

- Queries (e.g. find logs with sourceId = a specific value)

- Dashboards (e.g. count how many deletions happen per hour)
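To illustrate what structured fields make possible, here is a small Python sketch of the "deletions per hour" idea, run against already-parsed records. It is only a local illustration, not a Datadog API call. The helper name `deletions_per_hour` is hypothetical, and the sketch assumes `occurredAt` is a Unix timestamp in milliseconds, which the sample value suggests but the logs do not state.

```python
from collections import Counter
from datetime import datetime, timezone


def deletions_per_hour(records, change_flag="REMOVED"):
    """Count parsed records per UTC hour, keeping only the given changeFlag."""
    counts = Counter()
    for record in records:
        obj = record.get("DeletedObject", {})
        if obj.get("changeFlag") != change_flag:
            continue
        # Assumption: occurredAt is epoch time in milliseconds.
        occurred = datetime.fromtimestamp(obj["occurredAt"] / 1000, tz=timezone.utc)
        counts[occurred.strftime("%Y-%m-%d %H:00")] += 1
    return counts


# One record shaped like the parsed output, trimmed to the fields used here.
records = [
    {
        "DeletedObject": {
            "occurredAt": 1717171717171,
            "changeFlag": "REMOVED",
            "sourceId": "userRef:111222",
        }
    },
]

print(deletions_per_hour(records))
```

Without parsing, the same count would require searching raw message text; with the fields extracted, it becomes a simple filter and group-by.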

🙌 Conclusion

Parsing logs is very important for readability, searchability, and automation inside Datadog. Even if your logs are not structured well, you can use Grok parsing rules to extract exactly what you need.

This simple trick helped me organize our deletion logs and track important changes better. Try it in your own app logs and enjoy clean dashboards!